This Week In Veloren 134

This week, we see the plans for the 0.11 release. RTSim sees some optimizations, and we examine the situation with CI runners.

- AngelOnFira, TWiV Editor

Contributor Work

Thanks to this week's contributors, @xMAC94x, @XVar, @Sam, @imbris, @ubruntu, and @flo.

@XVar added a small utility cargo cmd-doc-gen that auto-generates documentation of the in-game commands for the book: https://book.veloren.net/players/commands.html.

You can read this week's meeting notes here.

0.11 Release Prep by @xMAC94x

The 0.11 release will be on Saturday, 2021-09-11, at 18:00 GMT. As usual, there will be a feature freeze starting from 2021-09-04 18:00 GMT. We recommend submitting critical and large MRs for review now, before the feature freeze.

This release will also have a stress test event between the feature freeze and the release. We will be sharing further details of this later.

Getting your large MR merged before feature freeze

Please mention @xMAC94x in the #ReleaseMRs_0.11 thread (in programmers) with a short summary with the following details (the more, the better):

- What is complete

- What needs to be done

- Can your feature be partially introduced to master in its current state (as multiple MRs)?

- Do you foresee any issues with your MR and if so, which ones?

- Why should this feature be in 0.11?

This is required to align our testing and review strategies to ensure a smooth release.

2021-09-04 18:00:00 GMT Feature freeze — No new feature MRs will be merged.

2021-09-06 18:00:00 GMT Stress test event.

2021-09-10 10:00:00 GMT Total freeze — no new merges will be introduced unless they're critical.

2021-09-10 16:00:00 GMT Release build will be compiled.

2021-09-11 12:00:00 GMT Main server hardware upgrade.

2021-09-11 18:00:00 GMT Release party!

RTSim optimizations by @ubruntu

I started working on making RTSim entities update in smaller groups because I saw a TODO comment in the code and got nerd sniped into trying to find a solution. The idea is that there are NPC entities that only exist when they are in a chunk that is loaded and in view of a player, and there are also RTSim entities that continue to simulate behavior when they are far away from players in unloaded chunks.

Originally, every RTSim entity was being simulated during every tick, and that didn't have to be the case. It's easier to tell something to move 10 feet once than to tell it 5 times to move 2 feet, which means during each tick we don't have to tell as many entities to update their position. The trade-off is that the precision of movement for simulated entities can suffer when too much time elapses between their updates, so they could for example run past the town they were trying to reach.

RTSim entities that are unloaded will update once every 30 server ticks, which I believe is slightly more than once per second. RTSim entities that are loaded in view of players are still updated every server tick so their pathfinding and general behavior shouldn't look noticeably different to players. In order to keep loaded and unloaded entities in sync we need to store the time (as an f64) that the entity was last updated, and use that to calculate the delta time which is then needed to calculate simulated travel speed.

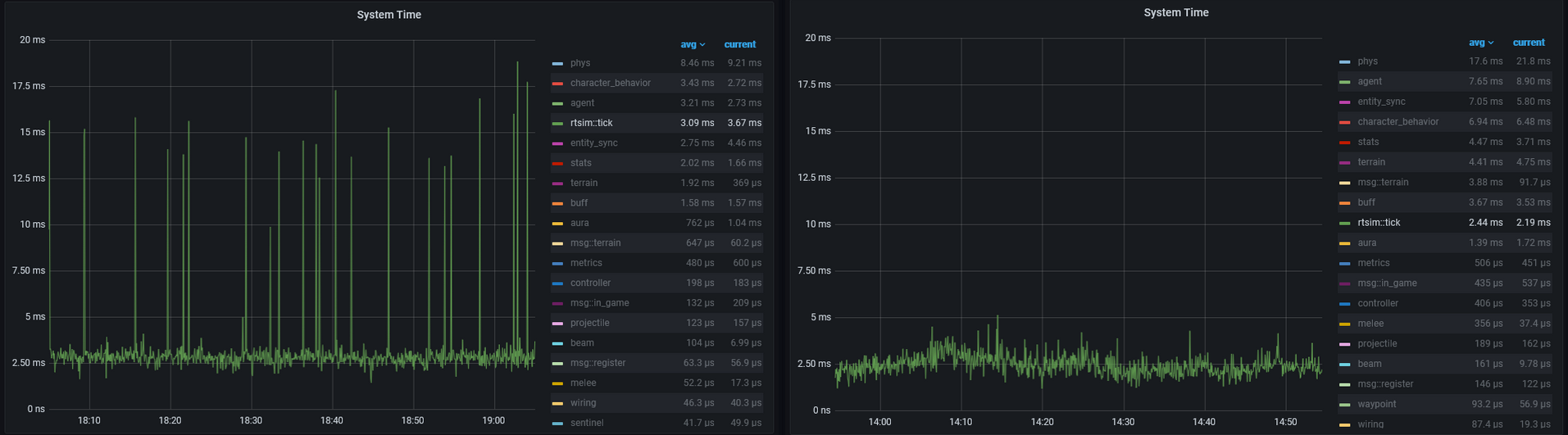

I'm satisfied with the solution because it takes advantage of the monotonically increasing tick number that was already there and some iterator methods found in the standard library. No new dependencies were needed and relatively few lines of code were added to achieve some performance gains. Thanks to @zesterer and @xMAC94x for helping me understand RTSim and timing-related things that the solution needed to take into account. RTSim tick performance before the change on the left, and after the change on the right (the server was also busier).
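The staggered update described above can be sketched roughly as follows. This is a minimal illustration, not the code that was merged: `SimEntity`, `TICK_STAGGER`, `advance`, and `tick_entities` are hypothetical names, movement is reduced to one dimension, and the use of `filter`, `skip`, and `step_by` is an assumption about which standard library iterator methods fit the job.

```rust
/// Hypothetical stand-in for an RTSim entity; the real type is not shown in this post.
#[derive(Debug, Clone)]
pub struct SimEntity {
    /// Position along a straight path (1D to keep the sketch small).
    pub pos: f64,
    /// Travel speed in distance units per second.
    pub speed: f64,
    /// True while the entity is in a loaded chunk in view of a player.
    pub is_loaded: bool,
    /// Server time in seconds at which this entity was last simulated.
    pub last_time_ticked: f64,
}

/// Assumed group count: unloaded entities are updated once every 30 ticks.
pub const TICK_STAGGER: usize = 30;

/// Move an entity by however much time has passed since its own last update.
fn advance(entity: &mut SimEntity, time: f64) {
    let dt = time - entity.last_time_ticked;
    entity.pos += entity.speed * dt;
    entity.last_time_ticked = time;
}

/// Run one server tick and return how many entities were simulated.
pub fn tick_entities(entities: &mut [SimEntity], tick: u64, time: f64) -> usize {
    let mut simulated = 0;

    // Loaded entities are still updated on every tick.
    for entity in entities.iter_mut().filter(|e| e.is_loaded) {
        advance(entity, time);
        simulated += 1;
    }

    // Unloaded entities: the tick number selects one of TICK_STAGGER groups.
    let offset = (tick % TICK_STAGGER as u64) as usize;
    for entity in entities
        .iter_mut()
        .filter(|e| !e.is_loaded)
        .skip(offset)
        .step_by(TICK_STAGGER)
    {
        advance(entity, time);
        simulated += 1;
    }

    simulated
}
```

Because each entity computes its delta time from its own stored timestamp, an entity that switches between loaded and unloaded (and therefore between groups) still moves by the correct distance on its next update.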

Fixing Veloren's CI Runner Shortage by @XVar

This writeup was originally created as a document for the Meta team to look into.

Introduction

Recently, Veloren has been suffering from a lack of available GitLab CI runners. Even when a runner is available, it often has insufficient resources and may fail jobs because the child processes of the build are OOM-killed by the host OS kernel. At the time of writing, we have only 3 CI runners (+1 dedicated macOS runner). This document aims to determine what the baseline resource requirements for a Veloren CI runner should be, and to propose a route forward.

Performance Benchmarking

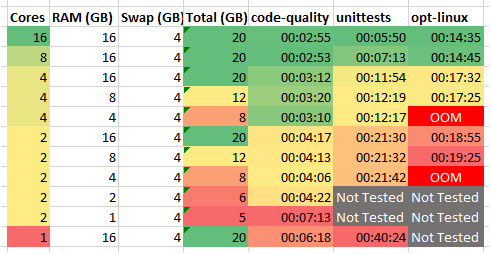

To determine the effect that the number of CPU cores and the amount of available RAM have on build times, a VMware virtual machine was used to run three different build jobs from the master branch. The host machine has an AMD Ryzen 3900X and 32GB RAM. The numbers presented below depend on the single-threaded performance of the host machine, so the absolute values matter less than the relative differences between resource configurations.

The first line in the table represents the baseline performance for a high-spec machine with a large amount of memory. With 16GB available, swap was not used at all during any of the builds in this configuration.

CPU Cores

Adding CPU cores appears to have diminishing returns beyond 8. However, when fewer than 4 cores are available, performance takes a significant hit, with the unittests job taking almost twice as long when the number of cores was reduced from 4 to 2. As is to be expected, further reducing the number of cores from 2 to 1 results in a 50% increase in duration for the code-quality job and a 100% increase in duration for the unittests job.

Memory

The RAM requirements for a runner vary based on the particular job, with those that don’t involve linking executables requiring much less RAM. The code-quality and unittests jobs use around 2GB of RAM and only really suffered when physical memory was reduced to 1GB, which meant that half of the memory for the job was provided by swap.

The opt-linux job, however, requires a significant amount of memory: around 11GB at peak. Attempting to run the job with less than 12GB of available memory (physical+swap) results in the runner jobs being OOM-killed by the kernel, and the build failing.

As is to be expected, additional available memory above the amount required for a given job does nothing to improve performance since the excess memory remains unused.
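The memory behaviour described in this section can be summarised with a small model. This is a simplified sketch, not a measurement tool: `MemoryOutcome` and `memory_outcome` are hypothetical names, sizes are whole gigabytes, and the model ignores memory used by the operating system and other processes on the runner.

```rust
/// What to expect when a job needing `required_gb` runs on a given runner.
#[derive(Debug, PartialEq, Eq)]
pub enum MemoryOutcome {
    /// Physical RAM covers the job; extra RAM beyond this brings no speed-up.
    FitsInRam,
    /// The job completes, but part of its memory comes from swap, so it runs slower.
    UsesSwap,
    /// Physical RAM plus swap is too small; the kernel OOM-kills build processes.
    OomKilled,
}

/// Classify a runner for a job, assuming the job needs `required_gb` in total.
pub fn memory_outcome(required_gb: u32, physical_gb: u32, swap_gb: u32) -> MemoryOutcome {
    if physical_gb >= required_gb {
        MemoryOutcome::FitsInRam
    } else if physical_gb + swap_gb >= required_gb {
        MemoryOutcome::UsesSwap
    } else {
        MemoryOutcome::OomKilled
    }
}
```

Using the figures quoted above, `memory_outcome(12, 8, 2)` models an opt-linux build on a runner with 8GB RAM and 2GB swap and yields `OomKilled`, while `memory_outcome(2, 1, 1)` models the 1GB code-quality case and yields `UsesSwap`.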

Security and role separation of runners

MR 2537, merged on 2021-08-20, introduces more granular runner tags to the Veloren CI configuration. One of the primary motivations for this work was to allow us to use runners provided by third parties and contributors without this being a security risk. For example, a rogue runner that is tasked with executing the job that publishes artifacts for distribution via AirShipper could in theory modify the binary before it’s uploaded back to GitLab to include malicious code (a supply-chain attack).

With this change merged, we can freely accept runners from anyone who wishes to provide them, even if they aren’t personally known by anyone on the project.

Recommendation

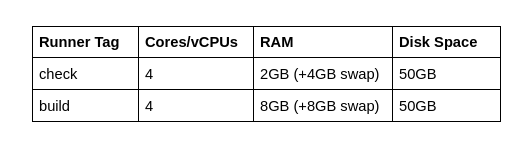

Minimum runner specification

Given the above results, it is clear that the resource requirements for a runner differ based on the job being run. Therefore there are two different minimum requirements proposed, depending on whether the runner is configured with just the check tag or also with the build tag.

While a minimum amount of physical RAM is listed, ideally the total required memory should be available as physical RAM so that swap is not used at all.

Although a runner with fewer than 4 cores would work, such runners cause unnecessary delays for CI jobs and inconvenience for developers.

If any of our current CI runners have fewer than 4 cores available, they should be considered for retirement once we meet our target number of runners in each category.

Target runner quantity

We should aim to have at least 8 CI runners of the above specification available to ensure prompt processing of CI jobs, with at least 4 with the build tag and at least 2 of those being trusted to process publish jobs. There is no need for an upper limit on runners: the more we have, the more contingency we have against runners being retired.
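As a rough illustration, the targets above could be checked against a list of runners like this. The `Runner` and `FleetShortfall` types and the `fleet_shortfall` function are hypothetical. Treating "of the above specification" as "at least 4 cores" is also an assumption taken from the prose, since the full specification table is not reproduced in the text.

```rust
/// Hypothetical description of one CI runner.
#[derive(Debug, Clone)]
pub struct Runner {
    pub cores: u32,
    /// Runner carries the build tag in addition to the check tag.
    pub has_build_tag: bool,
    /// Runner is trusted to process publish jobs.
    pub trusted_for_publish: bool,
}

/// How many more runners are needed in each category (0 means the target is met).
#[derive(Debug, PartialEq, Eq)]
pub struct FleetShortfall {
    pub total: usize,
    pub build: usize,
    pub publish: usize,
}

// Targets from the text: 8 runners, 4 with the build tag, 2 of those trusted.
const TARGET_TOTAL: usize = 8;
const TARGET_BUILD: usize = 4;
const TARGET_PUBLISH: usize = 2;
// Assumption: only runners with at least 4 cores count towards the targets.
const MIN_CORES: u32 = 4;

pub fn fleet_shortfall(runners: &[Runner]) -> FleetShortfall {
    let eligible: Vec<&Runner> = runners.iter().filter(|r| r.cores >= MIN_CORES).collect();
    let build = eligible.iter().filter(|r| r.has_build_tag).count();
    let publish = eligible
        .iter()
        .filter(|r| r.has_build_tag && r.trusted_for_publish)
        .count();
    FleetShortfall {
        total: TARGET_TOTAL.saturating_sub(eligible.len()),
        build: TARGET_BUILD.saturating_sub(build),
        publish: TARGET_PUBLISH.saturating_sub(publish),
    }
}
```

Reporting a shortfall per category, rather than a single yes or no, makes it easier to say which kind of runner to ask contributors for.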

Next Actions

If there is agreement on the above, we should advertise that we need runners, perhaps with a ping to @DevPingSquad or @Contributor and a section in the blog until we have enough.

If we have any unused resource available on our server infrastructure, we might consider hosting runners on it since the CPU/memory is otherwise sitting idle.

Since this writeup was created, @AngelOnFira has added 10 runners' worth of compute, and @Sam has added 2. This resolves the situation for the time being.

An early morning glide. See you next week!