This Week In Veloren 77

This week we hear about @lobster's progress on particles. @AngelOnFira discusses multithreading in gamedev.

- AngelOnFira, TWiV Editor

Contributor Work

Thanks to this week's contributors, @AngelOnFira, @Sharp, @Pfau, @imbris, @Menko1437, @zesterer, @Slipped, @Sam, @xMAC94x, @T-Dark0, @yusdacra, @Songtronix, @lausec, and @PatatasDelPapa!

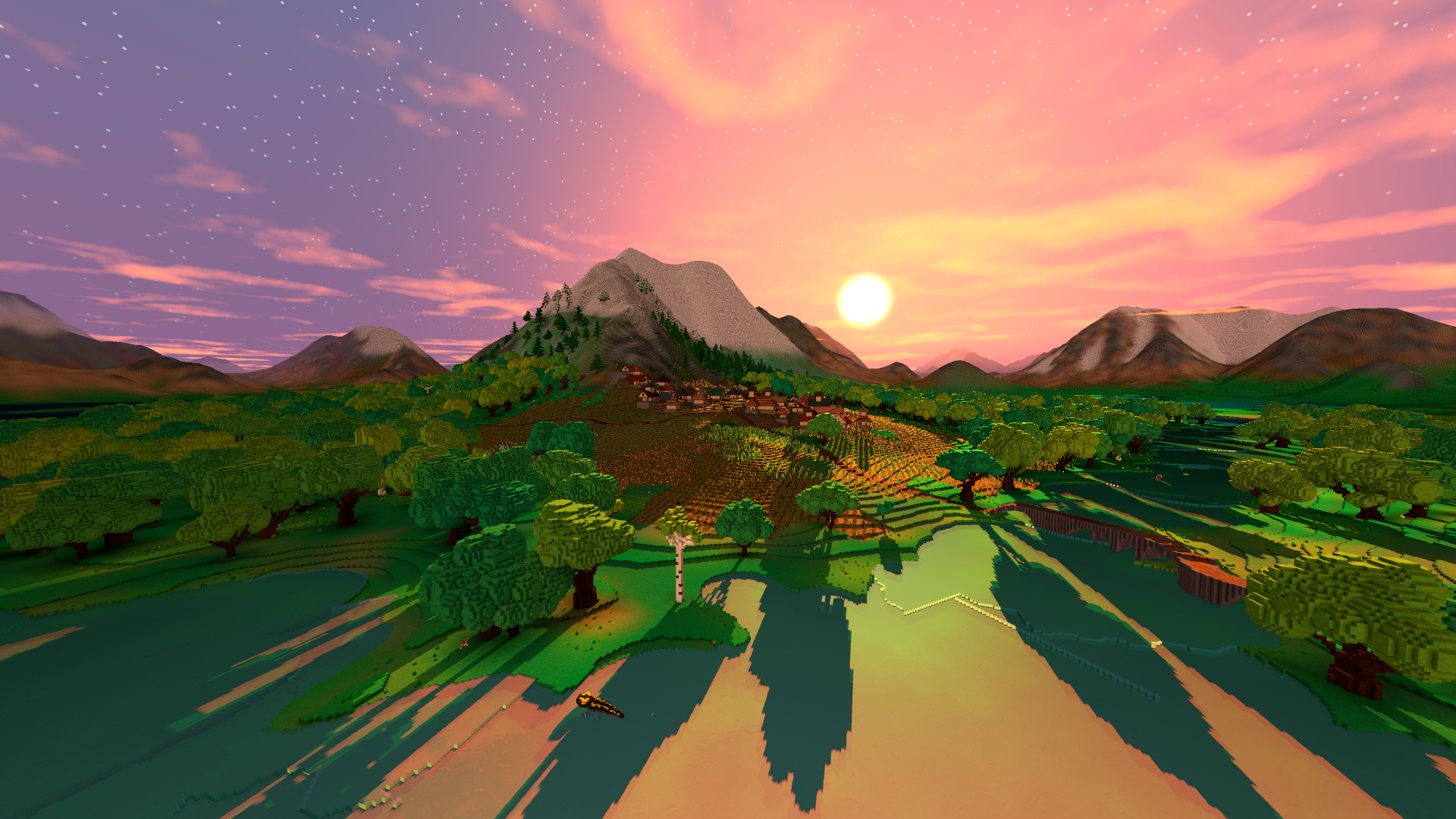

@Songtronix has been doing lots of work on Airshipper. He also ran the first UX working group meeting! @YuriMomo has been doing lots of UX work. @Felixader has been working on the city, town, and village descriptions for the brushwood elves. @imbris merged the winit upgrade, which consisted of a monumental amount of work. @Snowram has started on the fishing animation.

@XVar is working on an RFC proposal with @Sharp to improve how persistence works. Primarily the goal is to figure out how to deserialize items from inventories and loadouts. @lausec improved some German translations and fixed a bug where you could mount pets from very far away. They're also working on allowing admins to join a server even if they aren't on the whitelist. @Gemu modeled around 50 new axes and hammers to go with more diverse weapon drops.

Particle Improvements by @lobster

@lobster is still working on improving particles. Recently, they scrapped the particle emitter component, because particles are a visual representation of the already existing game state. It's better to use the existing game state than to create an unnecessary duplicate of it. The duplicate would eventually have led to state desynchronization bugs, and it would have wasted memory.
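To make the idea concrete, here is a minimal sketch of deriving particles from state that already exists instead of storing a separate emitter. Every name in it (`Campfire`, `ParticleInstance`, `particles_for_frame`) is hypothetical and made up for illustration; this is not Veloren's actual code.

```rust
// Hypothetical types for illustration only; not Veloren's real structs.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Vec3 {
    x: f32,
    y: f32,
    z: f32,
}

/// A piece of game state that already exists for gameplay reasons.
struct Campfire {
    pos: Vec3,
    lit: bool,
}

/// A small per-particle record handed to the renderer.
#[derive(Debug, PartialEq)]
struct ParticleInstance {
    spawn_pos: Vec3,
    spawn_time: f32,
}

/// Derives this frame's particles directly from the existing state.
/// No emitter component is stored, so there is no second copy of the
/// state that could drift out of sync with the first.
fn particles_for_frame(campfires: &[Campfire], now: f32) -> Vec<ParticleInstance> {
    campfires
        .iter()
        .filter(|c| c.lit)
        .map(|c| ParticleInstance {
            spawn_pos: c.pos,
            spawn_time: now,
        })
        .collect()
}
```

Calling `particles_for_frame` with one lit and one unlit campfire yields a single particle at the lit campfire's position. Putting out a fire only means flipping `lit`; there is no emitter to remember to remove.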

@lobster also scrapped the configurable particle idea because of performance concerns with uploading data to the GPU. Instead, we upload the bare minimum that each particle instance needs to function. Some particles also depend on different pieces of context, and there isn't a nice way for a generic config to use those dependencies in any meaningful way. Shaders handle this better: they are hot-loadable, and they are more flexible than a config because, alongside plain data values, they can contain expressions and statements and can use the context dependencies that are passed in. Basically, it's the same as animation code, except that instead of bones there are vertices, instances, and fragments, and it's written in GLSL instead of Rust.

CPU Workloads by @AngelOnFira

Earlier this week I saw a post on /r/opensource asking if a piece of software could distribute single-core games over multiple cores. I wrote this long-winded reply.

I think this is actually quite a good question. The short answer is no: a piece of software is bound by how it was written to handle computation. For a longer answer, I should mention that although I've been exposed to some of these topics, I haven't implemented them myself, so I'm open to corrections or further details. My experience comes from an open source game I'm contributing to, Veloren. The programming language we use, Rust, lets us make quite good use of multiple CPU cores.

First, it's important to know why it's difficult for code to work across different cores, or threads, or more fundamentally, processes. The key factor is the idea of "thread safety". At the ground level, many larger program architectures come down to how the variables in them are manipulated. If I have a variable, and I allow multiple pieces of running code to access it, then I'm very likely to make mistakes with how this variable is used. There are a few questions to ask about this:

- If a thread deleted this variable, do other threads know that it was deleted?

- Did a thread read the variable before another thread updated it? If so, was that the right order that I wanted?

- What if one thread overwrites an update that another thread just made to it?

These questions are not easy to answer without guarantees, like the ones that Rust provides:

- If multiple threads try to have "ownership" of a variable at the same time, the program won't compile.

- Once the "owner" of a variable goes out of scope, that variable is also deleted. No one else except the owner would expect it to be still there.
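Here is a minimal sketch of those two ownership rules with a thread involved. The function name `sum_on_worker` is made up for illustration; `std::thread::spawn` and `join` are standard library calls.

```rust
use std::thread;

/// Hands `scores` to a worker thread, which becomes its only owner.
fn sum_on_worker(scores: Vec<u32>) -> u32 {
    // `move` transfers ownership of `scores` into the closure.
    let handle = thread::spawn(move || scores.iter().sum::<u32>());

    // Uncommenting the next line is a compile error: `scores` was moved,
    // so this thread is no longer allowed to touch it.
    // println!("{:?}", scores);

    // The Vec is dropped once the worker's closure is done with it.
    handle.join().expect("worker thread panicked")
}
```

For example, `sum_on_worker(vec![1, 2, 3])` returns `6`. The interesting part is the commented-out line: the mistake is caught when compiling, not while the game is running.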

Further, there are some other ways that Rust helps you:

- Variables are immutable by default. This means that if you don't explicitly allow a variable to be changed, you can be sure that it won't change under your nose, and that you can rely on it being the same.
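As a rough sketch of what this looks like across threads: values are read-only unless you opt in, and the one thing several threads need to change is wrapped in a `Mutex`, so no update can be overwritten halfway through. `count_hits` is a hypothetical name; `std::thread::scope` needs Rust 1.63 or newer.

```rust
use std::sync::Mutex;
use std::thread;

/// Counts hits across `workers` threads without losing any update.
fn count_hits(workers: u32, hits_per_worker: u32) -> u32 {
    // `hits_per_worker` is immutable, so every thread can read it safely.
    // The shared total can only be changed by going through the Mutex.
    let total = Mutex::new(0u32);

    // Scoped threads may borrow local variables; they are all joined
    // before `scope` returns.
    thread::scope(|s| {
        for _ in 0..workers {
            s.spawn(|| {
                for _ in 0..hits_per_worker {
                    // Lock, update, unlock: no other thread can
                    // interleave inside this statement.
                    *total.lock().unwrap() += 1;
                }
            });
        }
    });

    // All workers have finished, so we can take the value back out.
    total.into_inner().unwrap()
}
```

With this, `count_hits(4, 1000)` always returns `4000`. Swapping the `Mutex<u32>` for a plain `let mut total = 0` that every thread mutates is rejected by the compiler, which is the "won't change under your nose" guarantee doing its job.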

When a game is only using 1 thread, it doesn't have to worry about any of this. Will anyone change this variable before I use it next? No, because only I can change it. What if the variable is deleted? Well, that's my fault and no one else's.

This next section is purely speculation on my part, since I don't have any industry experience.

Next, why don't AAA games make use of these extra cores? A primary reason is that the engines these games use are often very large and have many moving parts that prevent them from changing their core architecture. It would require a fundamental overhaul to introduce functionality that distributes the workload across multiple threads. Further, lots of research into distributed and parallel computing was still going on while these engines were being created.

On top of this, AAA games are quite used to working within hardware constraints. This is because when you are leading a game's development, you need to explicitly tell each team how much compute or graphics processing time they are allowed to use each frame. For AAA games on consoles, this is even more clear. Each PS4 has the exact same CPU and GPU, so you can be sure that if you run the game at the required 30 fps, anyone else will be able to as well. Back when these engines were being created for PC games, many computers only had one core, one thread. If you're suddenly telling these teams that they have double the compute power across two threads, or maybe 4 times on a different CPU, it wouldn't really change the engine that has to cooperate with consoles.

Finally, many games are not actually CPU bound. It is often the graphics card that is the limiting factor. Each type of processor is best suited to different tasks. For example, rendering lighting at each pixel is great for a GPU that has thousands of "dumb" cores. AI pathfinding that requires a lot of "smarter" compute is best on the CPU. This doesn't hold true for all games, but it's much easier to improve algorithm runtime for CPU tasks than it is for GPU tasks, since the GPU intrinsically has to do work on 1920 × 1080 = 2,073,600 pixels each frame.
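To put a number on that, here is a small sketch of the arithmetic, reusing the 30 fps figure from the console example. The function names are made up for illustration, and it assumes each pixel is shaded exactly once per frame, which real renderers often exceed.

```rust
/// Pixels the GPU has to produce for one frame at a given resolution.
fn pixels_per_frame(width: u64, height: u64) -> u64 {
    width * height
}

/// Pixels per second, assuming each pixel is shaded once per frame.
fn pixels_per_second(width: u64, height: u64, fps: u64) -> u64 {
    pixels_per_frame(width, height) * fps
}
```

At 1920 × 1080, `pixels_per_frame` gives 2,073,600, and `pixels_per_second` at 30 fps gives 62,208,000. That floor comes from the resolution and frame rate alone, no matter how clever the rest of the code is.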

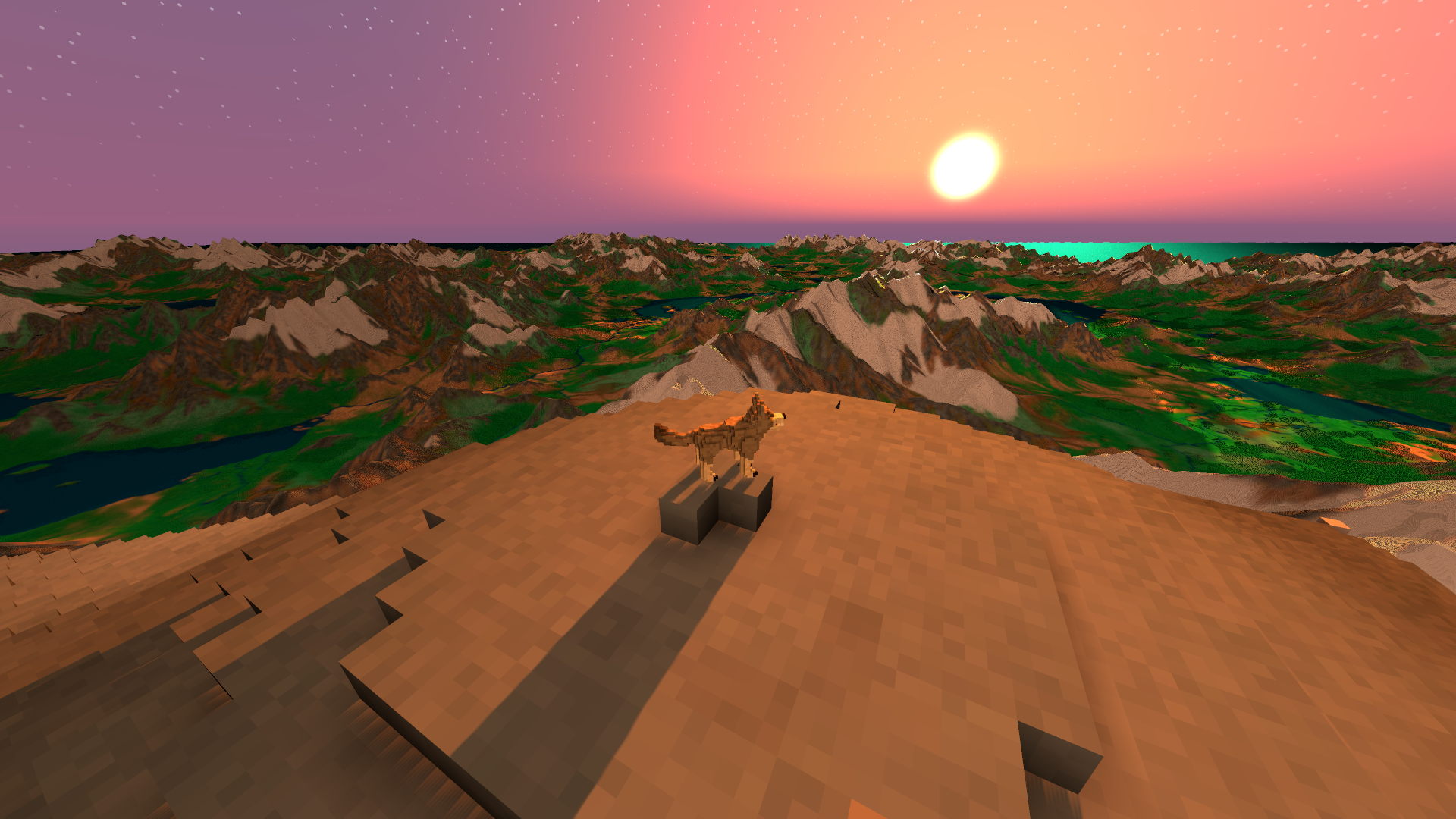

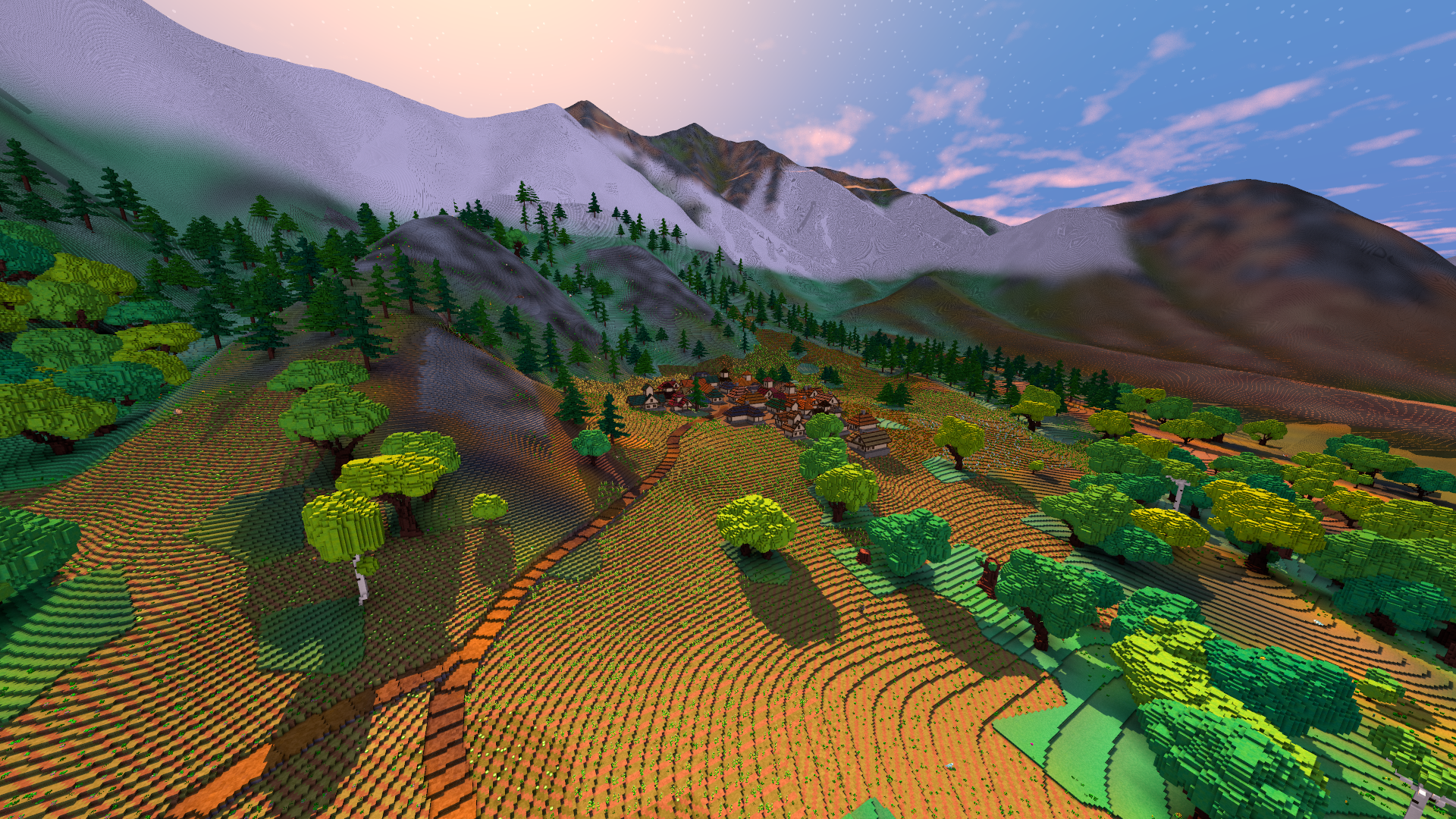

Anyways, I hope that most of this is correct and helpful. I'll send it to my team as well and add any edits if there is anything that is very out of place. Many mountains to explore. See you next week!